Hypothetically Speaking – Importance of Testing

The importance of mathematically constructing confidence in your opinions

With the recent slew of hacks, the blockchain space is looking closely at more robust ways of testing code and at stronger cybersecurity practices. Setting up test cases is coding 101, but the same idea works beautifully for analysts, who can use historical data to test potential theses. Let’s walk through some examples:

Hypothesis: Increased GitHub Commits Imply Imminent Token Pump

The rationale behind this is simple. The development team pushes code to GitHub, so ‘more’ code being committed to the project’s repo might mean that a feature release is coming. This would increase market confidence in the project, and hence the token price would pump. Essentially, GitHub commits serve as a proxy for the project’s technical development.

Let’s test this out.

- We define ‘more’ commits as a day whose commit count is above the 75th percentile of all historical daily commit counts.

- We take a portfolio of six similar tokens, say alt L1s.

- If a token’s number of GitHub commits on a given day is high (in the top 25% of all days), buy and hold that asset for 14 days.

- Otherwise, hold all six cryptoassets equally.
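The rules above can be sketched as a small backtest. This is a minimal illustration, not TIE’s actual code, and it rests on several assumptions of ours: ‘more’ means a day’s commits are strictly above the 75th percentile of that token’s own commit history up to that day (which avoids peeking at future data); if several tokens signal at once, the portfolio splits equally across them; a signal at one day’s close takes effect from the next day; and the function names, `min_history` and the input layout are hypothetical.

```python
from statistics import quantiles


def commit_strategy_returns(commits, returns, hold_days=14, min_history=30):
    """Daily returns of a hypothetical commit-signal strategy.

    commits: dict mapping token -> list of daily GitHub commit counts
    returns: dict mapping token -> list of daily simple returns (same dates)
    """
    tokens = sorted(commits)
    n_days = len(returns[tokens[0]])
    hold_until = {t: -1 for t in tokens}  # last day each token stays in the basket
    daily = []
    for day in range(n_days):
        # Positions chosen at earlier closes earn today's return.
        held = [t for t in tokens if hold_until[t] >= day]
        basket = held or tokens  # no live signal: hold all tokens equally
        daily.append(sum(returns[t][day] for t in basket) / len(basket))
        # After today's close, compare today's commits with the 75th
        # percentile of that token's commit history up to and including today.
        for t in tokens:
            history = commits[t][: day + 1]
            if len(history) < min_history:
                continue  # too little history for a meaningful percentile
            threshold = quantiles(history, n=4, method="inclusive")[2]
            if commits[t][day] > threshold:
                hold_until[t] = day + hold_days  # hold for the next hold_days days
    return daily


def total_return(daily_returns):
    """Compound a list of daily simple returns into one total return."""
    growth = 1.0
    for r in daily_returns:
        growth *= 1.0 + r
    return growth - 1.0


def buy_and_hold_total(returns):
    """Benchmark: split capital equally across tokens on day one, never rebalance."""
    return sum(total_return(r) for r in returns.values()) / len(returns)
```

Comparing `total_return(commit_strategy_returns(commits, returns))` with `buy_and_hold_total(returns)` gives the strategy-versus-benchmark comparison described in the next paragraph. Using each token’s own history for the percentile keeps a very active repo from signalling every day simply because it is active.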

The team at TIE performed this analysis back in 2021 and found that the GitHub strategy does outperform a simple buy-and-hold. But was that just luck? To test the strategy’s robustness, the team traded the same six assets at random, and the random strategies outperformed the commit-based strategy about 20% of the time, which is too often to rule luck out. The study concluded that GitHub commits do not have a clear impact on token price; many commits are minor bug fixes and documentation updates. GitHub commits are not tradable alpha.
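The randomised robustness check can be sketched as a simple Monte Carlo comparison. We do not know exactly how TIE generated its random trades, so this assumes one plausible design: each random trader holds one randomly chosen token per 14-day block, and we count how often such a trader beats the commit strategy’s total return. The function names and the `n_trials` and `seed` parameters are our own, hypothetical choices.

```python
import random


def total_return(daily_returns):
    """Compound daily simple returns into a single total return."""
    growth = 1.0
    for r in daily_returns:
        growth *= 1.0 + r
    return growth - 1.0


def random_strategy_returns(returns, rng, hold_days=14):
    """One random trader: every hold_days days, hold a randomly chosen token."""
    tokens = sorted(returns)
    n_days = len(returns[tokens[0]])
    daily = []
    for start in range(0, n_days, hold_days):
        pick = rng.choice(tokens)
        daily.extend(returns[pick][start:start + hold_days])
    return daily


def share_beating_strategy(strategy_total, returns, n_trials=1000, seed=0):
    """Fraction of random traders whose total return beats the strategy's."""
    rng = random.Random(seed)  # seeded so the check is reproducible
    wins = sum(
        total_return(random_strategy_returns(returns, rng)) > strategy_total
        for _ in range(n_trials)
    )
    return wins / n_trials
```

The returned share reads roughly like a p-value: a result near 0.2 means about one random trader in five did better, far from the 5% level usually taken as evidence that a signal is not just noise.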

Hypothesis: Institutional Capital Yield Farms

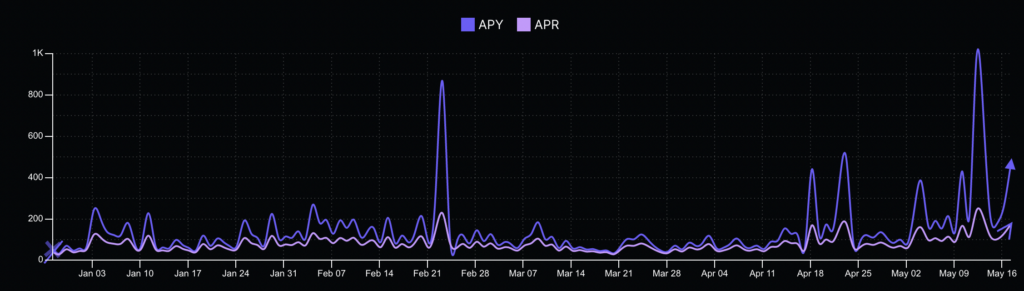

Nansen has labeled the wallets of popular crypto firms such as Polychain, Mechanism, Alameda, 3AC (rest in peace), TRGC and Framework. Let’s track Alameda’s journey with xSUSHI.

Data shows that Alameda staked xSUSHI in Jan and Feb ’21, when rates were around 20% APY. Their wallet held no xSUSHI from Feb to mid-April ’21, but they began buying back in once the APY started rising. In this case, wallet tracking shows that Alameda does yield farm and optimizes for high ROI.

This is just one example. Depending on the level of confidence required, the analyst would have to repeat this data crunching for different firms and different assets.
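Repeating the check across many firms and assets is easier with a single score per wallet and asset. One simple option, which is our assumption rather than Nansen’s methodology, is the correlation between a wallet’s balance and the pool’s APY over the same dates. The `panel` layout and function names are hypothetical, and real data would need to be exported from a wallet-tracking tool first.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation of two equally long series; None if either is flat."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return None  # correlation is undefined for a constant series
    return cov / sqrt(var_x * var_y)


def apy_chasing_scores(panel):
    """Score each (firm, asset) pair by how closely holdings track APY.

    panel maps (firm, asset) -> (balances, apys), both sampled on the same dates.
    A score near +1 means the wallet grows when yields rise and shrinks when
    they fall, which is consistent with (not proof of) yield farming.
    """
    return {key: pearson(bal, apy) for key, (bal, apy) in panel.items()}
```

A plain same-day correlation ignores reaction lag; since a fund may move days after rates change, shifting the balance series by a few days before scoring is a natural refinement.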

Hypothesis: No VC Stake in Token Distribution Is Better for Prices

The YFI token was launched with zero pre-mined supply and a hard cap of 30,000 tokens. The Nansen dashboard indicates that institutions did not hold YFI for long periods, but they did trade the token. For example, Polychain bought YFI in Oct ’20 and sold it in Jan ’21, after the token’s price and the protocol’s TVL had increased significantly. They traded the token again between Jan ’21 and May ’21, reducing their position at the end as staking rewards fell to 2%.

YFI’s launch without a pre-mine left it with few long-term institutional holders. Even so, in ROI terms, the token performed well before Andre Cronje quit DeFi. This leads us to believe that while it is good to have long-term investors supporting a token, a project with strong fundamentals can perform well without them.

The Importance of Testing

The crypto ecosystem is still building out its research capabilities, and I believe that creating test cases and backtesting hypotheses with data is a powerful tool for most analysts. Furthermore, doing napkin maths on diverse problems, like figuring out the amount of electricity wasted in Malaysian Airbnbs, is a fun way of building a generalist mindset that can draw analogies from different fields and solve problems creatively.

For example, earlier this week, my friend Jaski and I guesstimated the revenue we could’ve earned by buying NFTs of my top 10 Spotify songs. While working through it, we realized how limited our data was and how flawed some of our assumptions were. Nonetheless, we still ended up with:

- a rough range for the revenue number

- a barebones framework for calculating the fair price of these NFTs.

These exercises are important as we build the economic models for web3, and I encourage readers to build their own mathematical models for ‘why BTC will be a US$10tn asset’.
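A first napkin step for such a model is to ask what price a US$10tn market cap implies per coin. This sketch assumes Bitcoin’s 21 million protocol supply cap; circulating supply is lower today, which would push the implied price higher. The function name is our own.

```python
def implied_price_usd(target_market_cap_usd, supply):
    """Napkin math: price per coin needed to reach a target market cap."""
    return target_market_cap_usd / supply


BTC_MAX_SUPPLY = 21_000_000  # protocol cap; circulating supply is lower today
price = implied_price_usd(10e12, BTC_MAX_SUPPLY)
print(f"US$10tn / 21M BTC = about US${price:,.0f} per BTC")
```

This prints roughly US$476,190 per BTC. The interesting part of the model is everything that has to be true for that number to hold.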

Until next time…